Evaluating DeFlaker

We performed an extensive evaluation of DeFlaker by re-running historical builds for 26 open-source Java projects, executing more than 47,000 builds in total and consuming over five CPU-years. We also deployed DeFlaker live on Travis CI, integrated into the builds of 96 projects. A complete description of our evaluation is available in our ICSE 2018 paper.

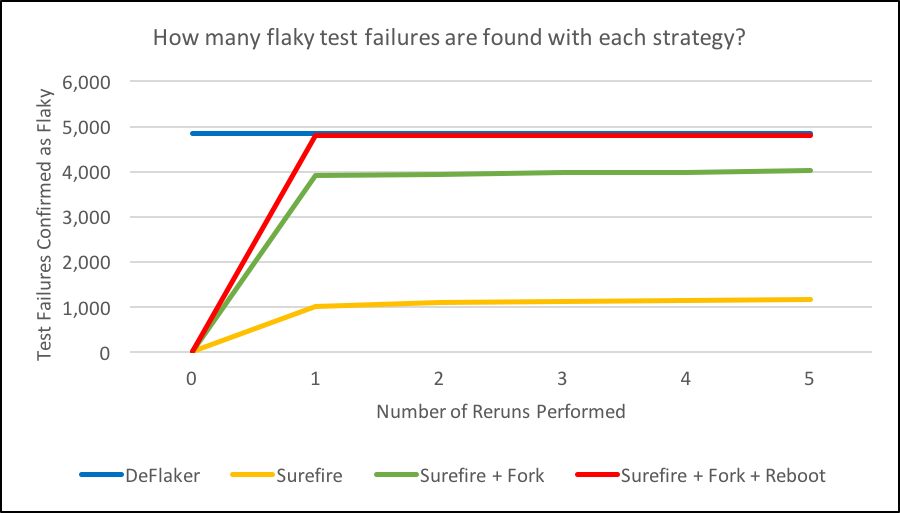

Our primary goal was to evaluate how many flaky tests DeFlaker would find compared with a traditional rerun strategy. For each build, whenever a test failed, we reran it. At first, we considered only the rerun approach implemented by Maven's Surefire test runner, which reruns each failed test in the same JVM in which it originally failed. We were surprised to find that, even when we allowed up to five reruns, only 23% of test failures eventually passed (and were hence marked as flaky)!
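To illustrate one way a same-JVM rerun can keep failing, here is a minimal sketch under stated assumptions. It models a JVM as an object whose "static" state survives between test executions, and a hypothetical victim test that fails once an earlier test has polluted that state. The names FakeJvm, polluting_test, victim_test, rerun_in_same_jvm, and rerun_in_fresh_jvm are hypothetical and do not describe Surefire's implementation; shared-state pollution is only one possible cause of a persistent failure.

```python
"""Sketch: why rerunning a failed test in the same process can keep failing.

Assumption (illustrative only): a hypothetical test reads shared, mutable
"static" state that an earlier test left in a bad state. The JVM is modeled
as a plain Python object; this is not Surefire's actual implementation.
"""


class FakeJvm:
    """Models a JVM whose static state survives between test executions."""

    def __init__(self) -> None:
        self.static_cache: dict = {}


def polluting_test(jvm: FakeJvm) -> bool:
    # An earlier (hypothetical) test writes a stale value into shared state.
    jvm.static_cache["config"] = "stale"
    return True


def victim_test(jvm: FakeJvm) -> bool:
    # Passes only if the shared state is clean.
    return jvm.static_cache.get("config", "fresh") == "fresh"


def rerun_in_same_jvm(jvm: FakeJvm, test, max_reruns: int = 5) -> bool:
    """Surefire-style: every rerun reuses the JVM where the test first failed."""
    return any(test(jvm) for _ in range(max_reruns))


def rerun_in_fresh_jvm(test, max_reruns: int = 5) -> bool:
    """Fork-style: every rerun gets a brand-new JVM with clean static state."""
    return any(test(FakeJvm()) for _ in range(max_reruns))


if __name__ == "__main__":
    jvm = FakeJvm()
    polluting_test(jvm)
    print("first run passed:", victim_test(jvm))  # False
    print("same-JVM reruns passed:", rerun_in_same_jvm(jvm, victim_test))  # False
    print("fresh-JVM reruns passed:", rerun_in_fresh_jvm(victim_test))  # True
```

Because the polluted state persists inside the same JVM, all five same-JVM reruns fail for the same reason as the first run, while a single rerun in a clean JVM passes.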

The strategy by which a test is rerun matters greatly: make a poor choice, and the test will continue to fail for the same reason that it failed the first time. Hence, to obtain a better oracle of test flakiness, and to understand how best to use reruns to detect flaky tests, we reran each failed test under three strategies, moving on to the next strategy only if the test still had not passed: (1) Surefire: up to five reruns in the same JVM in which the test ran (Maven's rerun technique); (2) Fork: up to five reruns, each in a clean, new JVM; (3) Reboot: up to five reruns, running mvn clean between tests and rebooting the machine between runs.
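The escalating Surefire, Fork, and Reboot sequence can be sketched as a small oracle that tries each strategy in order, for up to five reruns each, and reports which strategy (if any) first made the failed test pass. This is a minimal sketch, assuming each strategy is a hypothetical callable that performs one rerun and returns whether it passed; invoking Maven, forking JVMs, or rebooting machines is deliberately left out, and classify_failure is an illustrative name, not part of DeFlaker.

```python
"""Sketch of a tiered rerun oracle: Surefire, then Fork, then Reboot.

Assumption (hypothetical, for illustration): each strategy is a callable
that performs one rerun of a test and returns True if the test passed.
"""
from typing import Callable, Optional, Sequence, Tuple

RerunFn = Callable[[str], bool]  # test name -> did this rerun pass?


def classify_failure(
    test_name: str,
    strategies: Sequence[Tuple[str, RerunFn]],
    max_reruns: int = 5,
) -> Optional[str]:
    """Return the name of the first strategy under which the test passes.

    Strategies are tried in order, each for up to ``max_reruns`` attempts;
    the next strategy is used only if the test still has not passed.
    Returns None if no rerun passes (the failure is never shown to be flaky).
    """
    for strategy_name, rerun in strategies:
        for _ in range(max_reruns):
            if rerun(test_name):
                return strategy_name  # failed, then passed: flaky
    return None


if __name__ == "__main__":
    def always_fails(name: str) -> bool:
        return False

    def always_passes(name: str) -> bool:
        return True

    strategies = [
        ("Surefire", always_fails),  # same JVM: keeps failing
        ("Fork", always_passes),     # clean JVM: passes
        ("Reboot", always_passes),
    ]
    print(classify_failure("FooTest#testBar", strategies))  # Fork
```

Ordering the strategies from cheapest to most expensive means the costly Reboot step is reached only for failures that survived both earlier tiers.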

Finding Flaky Tests

We compared the number of flaky tests found by each of these strategies with the number found by DeFlaker on those same executions. DeFlaker found nearly as many flaky tests as the most expensive strategy (Reboot), and far more than the other strategies.
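As described in the ICSE 2018 paper, DeFlaker marks a newly failing test as flaky if that test did not execute any of the code changed since the previous build. The sketch below illustrates that rule and one simple way to compare its verdicts against a rerun oracle. It assumes coverage and changes are modeled as sets of (file, line) pairs, whereas real tooling collects them through instrumentation and a version-control diff. The function names, test names, and data are hypothetical.

```python
"""Sketch: a DeFlaker-style flakiness check compared against a rerun oracle.

Assumptions (illustrative only): coverage and code changes are modeled as
sets of (file, line) pairs; test names and data are hypothetical.
"""
from typing import Dict, Set, Tuple

Line = Tuple[str, int]  # (source file, line number)


def deflaker_flags_flaky(covered: Set[Line], changed: Set[Line]) -> bool:
    """A newly failing test that executed none of the changed code is flaky."""
    return covered.isdisjoint(changed)


def compare_to_oracle(flagged: Set[str], oracle: Set[str]) -> Dict[str, int]:
    """Count agreement between flagged tests and tests shown flaky by reruns."""
    return {
        "found_by_both": len(flagged & oracle),
        "missed_by_deflaker": len(oracle - flagged),
        "flagged_not_confirmed": len(flagged - oracle),
    }


if __name__ == "__main__":
    changed = {("Parser.java", 42)}
    # Coverage of the tests that failed in this hypothetical build.
    failing_coverage = {
        "ParserTest#testParse": {("Parser.java", 40), ("Parser.java", 42)},
        "NetTest#testTimeout": {("Client.java", 10)},
    }
    flagged = {
        test for test, cov in failing_coverage.items()
        if deflaker_flags_flaky(cov, changed)
    }
    oracle = {"NetTest#testTimeout"}  # e.g., passed on a Fork or Reboot rerun
    print(flagged)  # {'NetTest#testTimeout'}
    print(compare_to_oracle(flagged, oracle))
```

Here ParserTest#testParse executed the changed line, so its failure is treated as a likely real regression rather than a flaky failure, and no rerun is needed to reach that verdict.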

Runtime Overhead

We measured DeFlaker's runtime overhead on the ten most recent builds of each of those 26 projects, comparing it against state-of-the-art tools: the regression test selection tool Ekstazi and the code coverage tools JaCoCo, Cobertura, and Clover. Overall, DeFlaker was very fast, often imposing an overhead of less than 5%, far lower than that of the other coverage tools we examined.
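Relative runtime overhead is the extra time a tool adds to a build, expressed as a percentage of the uninstrumented build time. The sketch below shows that arithmetic under an assumption: build times are wall-clock seconds, and the numbers in the example are made up rather than taken from the study. The function name overhead_percent is illustrative.

```python
"""Sketch: relative runtime overhead of an instrumented build.

Assumption (illustrative): wall-clock times in seconds for a baseline build
and for the same build run with a tool; the example numbers are made up.
"""


def overhead_percent(baseline_s: float, instrumented_s: float) -> float:
    """Relative slowdown: (instrumented - baseline) / baseline * 100."""
    if baseline_s <= 0:
        raise ValueError("baseline time must be positive")
    return (instrumented_s - baseline_s) / baseline_s * 100.0


if __name__ == "__main__":
    # Hypothetical timings for one build (not measurements from the study).
    print(f"{overhead_percent(200.0, 208.0):.1f}%")  # 4.0%
```

Under this definition, a build that takes 200 s without instrumentation and 208 s with it has an overhead of 4%, which would fall under the 5% mark.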

[table id=1 /]